What is the Definition of Done

The Definition of Done (DoD) is a clear and agreed-upon set of criteria or standards that must be met for a software development task, feature, or user story to be considered complete and ready for release or deployment. It serves as a shared understanding among the team members, stakeholders, and customers about what constitutes a finished and acceptable deliverable.

A DoD sets clear expectations for the quality and completeness of work, which supports consistency, transparency, and accountability in the development process. With a well-defined DoD, the team can minimize misunderstandings, reduce rework, and maintain a high level of product quality.

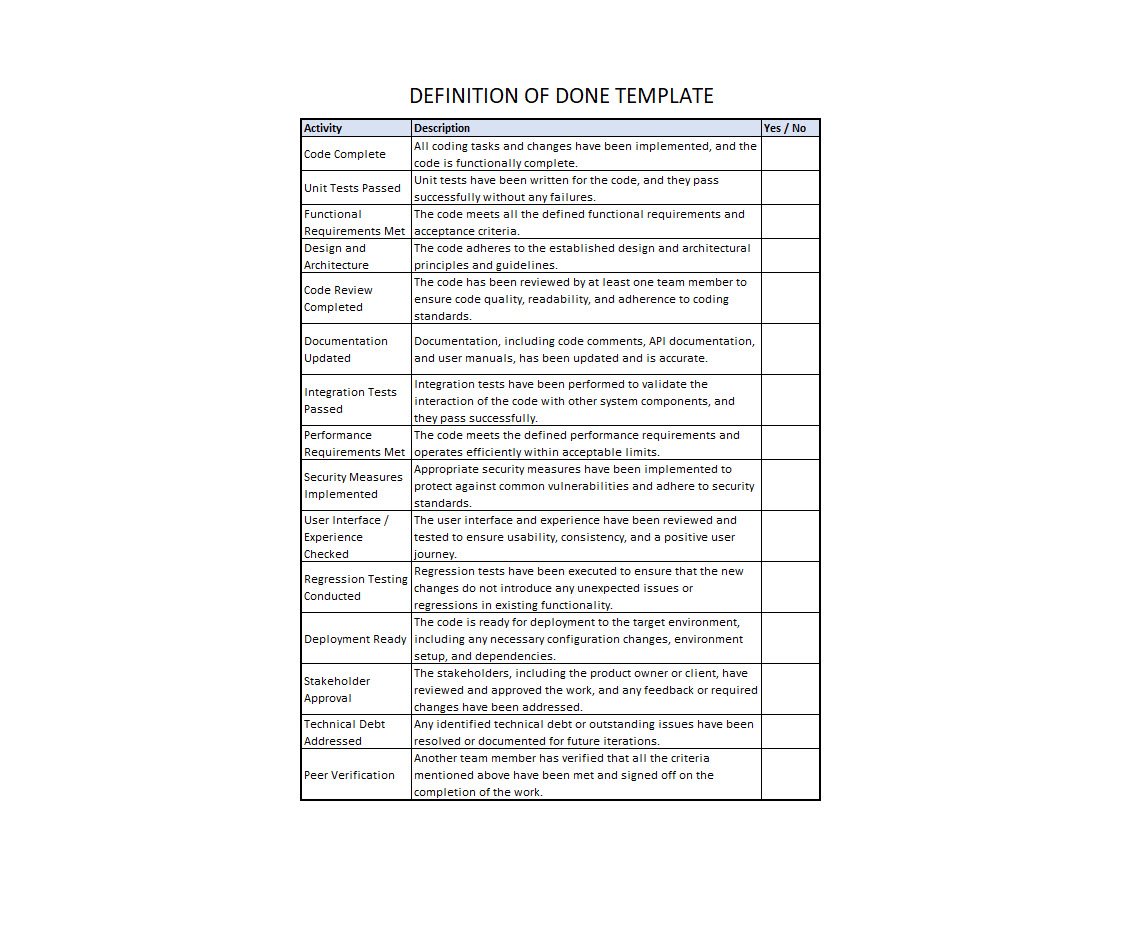

Definition of Done Example

The Definition of Done typically lists the aspects that must be addressed and validated before a task or feature is considered “done.” These aspects may cover technical, functional, and non-functional requirements.

Some common elements found in a DoD include:

- Code Complete: All coding tasks and changes have been implemented, and the code is functionally complete.

- Unit Tests Passed: Unit tests have been written for the code, and they pass successfully without any failures.

- Functional Requirements Met: The code meets all the defined functional requirements and acceptance criteria.

- Design and Architecture: The code adheres to the established design and architectural principles and guidelines.

- Code Review Completed: The code has been reviewed by at least one team member to ensure code quality, readability, and adherence to coding standards.

- Documentation Updated: Documentation, including code comments, API documentation, and user manuals, has been updated and is accurate.

- Integration Tests Passed: Integration tests have been performed to validate the interaction of the code with other system components, and they pass successfully.

- Performance Requirements Met: The code meets the defined performance requirements and operates efficiently within acceptable limits.

- Security Measures Implemented: Appropriate security measures have been implemented to protect against common vulnerabilities and adhere to security standards.

- User Interface/Experience Checked: The user interface and experience have been reviewed and tested to ensure usability, consistency, and a positive user journey.

- Regression Testing Conducted: Regression tests have been executed to ensure that the new changes do not introduce any unexpected issues or regressions in existing functionality.

- Deployment Ready: The code is ready for deployment to the target environment, including any necessary configuration changes, environment setup, and dependencies.

- Stakeholder Approval: The stakeholders, including the product owner or client, have reviewed and approved the work, and any feedback or required changes have been addressed.

- Technical Debt Addressed: Any identified technical debt or outstanding issues have been resolved or documented for future iterations.

- Peer Verification: Another team member has verified that all the criteria mentioned above have been met and signed off on the completion of the work.

These items vary by project, organization, and industry. The sample checklist above is a general outline of what to consider when defining the “Done” criteria for a software development task or project.
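One way to make such a checklist concrete is to model it as data, so that "done" becomes a yes/no answer rather than a judgment call. The sketch below is only an illustration: the `Criterion` and `WorkItem` classes and the "Password reset" story are hypothetical names invented for this example, and the three criteria are a small subset of the list above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One line of a Definition of Done checklist."""
    name: str
    satisfied: bool = False


@dataclass
class WorkItem:
    """A task or user story tracked against the checklist (hypothetical model)."""
    title: str
    criteria: list[Criterion] = field(default_factory=list)

    def unmet(self) -> list[str]:
        """Names of the criteria that have not been satisfied yet."""
        return [c.name for c in self.criteria if not c.satisfied]

    def is_done(self) -> bool:
        """The item is done only when no criterion is left unmet."""
        return not self.unmet()


# Example story with one criterion still open.
story = WorkItem(
    title="Password reset",
    criteria=[
        Criterion("Code Complete", satisfied=True),
        Criterion("Unit Tests Passed", satisfied=True),
        Criterion("Code Review Completed", satisfied=False),
    ],
)

print(story.is_done())
print(story.unmet())
```

Listing the unmet criteria by name, rather than returning only a boolean, keeps the conversation about what is missing specific.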

Definition of Done Best Practices

Best practices for the Definition of Done (DoD) help teams establish effective, reliable criteria for deciding when a software development task is complete.

Here are some best practices to consider:

- Collaborative Definition: Involve all relevant stakeholders, including developers, testers, product owners, and customers, in defining the DoD. This ensures that everyone has a shared understanding and agreement on the expectations of a completed task.

- Clear and Specific Criteria: Make sure the DoD is clear, specific, and unambiguous. Avoid vague or subjective language that could lead to different interpretations. Use measurable criteria whenever possible to provide objective guidelines.

- Iterative Refinement: Review and refine the DoD regularly based on feedback and lessons learned from previous iterations. The DoD should evolve as the team gains experience and adapts to changing project requirements.

- Tailor to Project Needs: Customize the DoD to suit the specific needs and characteristics of the project, such as industry standards, regulatory compliance, or customer preferences. Avoid a one-size-fits-all approach.

- Realistic and Achievable Standards: Ensure that the criteria in the DoD are realistic and achievable within the project constraints. Strive for a balance between quality and efficiency, considering factors such as time, resources, and technical feasibility.

- Test Automation: Emphasize the use of test automation to streamline and accelerate the testing process. Automated tests can help ensure consistent and repeatable validation of the DoD criteria.

- Continuous Integration and Deployment: Integrate the DoD into a continuous integration and deployment (CI/CD) pipeline. Automate the deployment and validation processes to minimize human error and expedite the delivery of completed tasks.

- Regular Reviews and Audits: Conduct regular reviews and audits to verify compliance with the DoD. This can involve code reviews, testing reviews, or inspections to ensure that the defined criteria are being followed consistently.

- Transparent Communication: Foster open and transparent communication within the team and with stakeholders regarding the DoD. Ensure that everyone understands the importance of meeting the DoD criteria and the implications of incomplete or low-quality work.

- Continuous Improvement: Encourage a culture of continuous improvement by regularly reflecting on the DoD’s effectiveness and seeking feedback from team members. Identify areas for improvement and make adjustments to enhance the quality and efficiency of the development process.
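As a rough illustration of the "measurable criteria" and CI/CD points above, a pipeline step could compare reported metrics against agreed thresholds and fail the build when any criterion is missed. This is a minimal sketch under stated assumptions: the metric names, the threshold values, and the `evaluate` and `gate` functions are all hypothetical, and a real pipeline would read the metrics from its own test and review tooling.

```python
from __future__ import annotations

# Hypothetical thresholds; each team would agree on its own numbers.
# "min" means the value must be at least the limit, "max" at most the limit.
THRESHOLDS = {
    "unit_test_failures": ("max", 0),
    "line_coverage_percent": ("min", 80.0),
    "approving_reviews": ("min", 1),
}


def evaluate(metrics: dict[str, float]) -> list[str]:
    """Return one message for every criterion that is not met."""
    failures = []
    for name, (kind, limit) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: no value reported")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} is below the minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} is above the maximum {limit}")
    return failures


def gate(metrics: dict[str, float]) -> int:
    """Print unmet criteria and return a process-style exit code (0 = pass)."""
    failures = evaluate(metrics)
    for message in failures:
        print(f"DoD not met - {message}")
    return 1 if failures else 0


# Sample metrics (made up for this example): coverage misses the threshold.
sample = {
    "unit_test_failures": 0,
    "line_coverage_percent": 72.5,
    "approving_reviews": 1,
}
exit_code = gate(sample)  # a CI step could pass this value to sys.exit()
```

Treating a missing metric as a failure is a deliberate choice here: a criterion that was never measured should not count as met.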

Remember that the best practices for DoD may vary depending on the project, team, and industry context. It’s crucial to adapt and refine the practices to suit your specific needs and continuously evaluate their effectiveness to drive improvements in your development processes.

Let me know in the comments if you are using DoD. How does it work for your team?
